Will data scientists become obsolete in the next 10 years?

Will tech companies still need data scientists, or are they destined to become just another blip in the history books – disappearing from the world like film projectionists and bowling alley pinsetters before them?

A couple of decades ago, to set up an online web service you’d have to buy physical servers and colocate them somewhere. It took planning, effort, upfront capital, lead times and physical access.

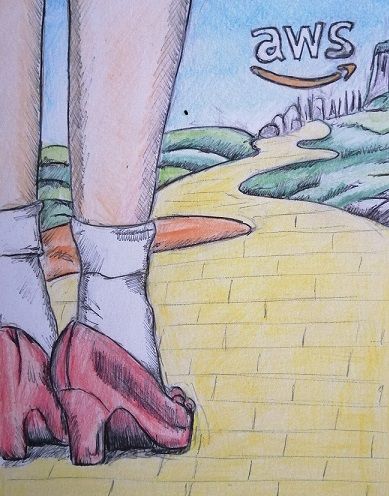

Now, with three clicks of your mouse/heels, you can summon 20 cloud servers to answer your bidding instantly.

And just a few years ago, to set up a machine learning service, your data scientists would have to laboriously work through a morass of preprocessing, parameterisation and acceptance testing. It took planning, effort, lead times, salaries, datasets, computation, and a tolerance for risk.

What about now? Are we nearing ‘three clicks of our heels’ for machine learning services too? And, if so, does this mean in-house data scientists will become a thing of the past?

The 80:20 machine learning solution

Let’s consider a concrete example. Say you want to automatically assign tags to new blog posts. We’ll assume that the set of tags has already been decided, and that you have a number of blog posts which have already been tagged manually.

Option #1) Well, you can always do it by hand and avoid machine learning altogether. Clients are sometimes surprised when I recommend this to them, but it’s often the most cost-effective option - the costs of developing, deploying and maintaining an algorithmic option only pay for themselves at pretty large scale, and you avoid a lot of risk this way.

For this example, let’s say we’re at large scale and that it’s not feasible for humans to do things manually.

Option #2) You can train and deploy a custom machine learning model yourself. This is probably the right approach if:

- Performance on this task is a key differentiator or core to your business, and you’re trying to push the state-of-the-art.

- You have special requirements (e.g. you need to solve an unusual problem or with unusual constraints, or you need access to the innards of the model to understand how it’s working).

But in practice, these requirements are quite rare. Most business data science teams are looking for an 80:20 solution - that is, they’d rather have a decent solution that they can deploy quickly and cheaply than a state-of-the-art solution at great expense. Certainly that’s what most managers of business data science teams want!

Option #3) For these cases, the smart move is to wrap an off-the-shelf cloud solution.

Our blog/text classification problem, for instance, has already been commoditised. You can give AWS Comprehend Custom Classification a few example documents for each category hand-labelled with your tags, and it can generalise from them. So now all your new blog posts would automatically be tagged fairly reliably. Hurray!
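
As a rough illustration of what "calling the API" might look like, here is a minimal sketch. It assumes a custom classifier has already been trained and deployed behind an endpoint, and that `client` behaves like `boto3.client("comprehend")`, whose `classify_document` call takes `Text` and `EndpointArn`. The function name `tag_post`, the `min_score` cut-off, the endpoint ARN and the stub client are all hypothetical, added here only so the example runs offline.

```python
from typing import Any, Dict, List


def tag_post(client: Any, endpoint_arn: str, text: str,
             min_score: float = 0.5) -> List[str]:
    """Return the tags whose confidence score is at least min_score.

    `client` is assumed to behave like boto3.client("comprehend").
    """
    response: Dict[str, Any] = client.classify_document(
        Text=text, EndpointArn=endpoint_arn
    )
    # Multi-label classifiers return "Labels"; multi-class ones return "Classes".
    candidates = response.get("Labels") or response.get("Classes") or []
    return [c["Name"] for c in candidates if c["Score"] >= min_score]


class StubClient:
    """Offline stand-in for the real client, returning made-up scores."""

    def classify_document(self, Text: str, EndpointArn: str) -> Dict[str, Any]:
        return {"Labels": [{"Name": "machine-learning", "Score": 0.91},
                           {"Name": "cooking", "Score": 0.04}]}


tags = tag_post(StubClient(), "arn:hypothetical-endpoint", "A post about models")
print(tags)
```

Swapping the stub for a real client is the easy part. Choosing `min_score` is already a data science question, because it trades missed tags against wrong ones.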

Solutions like these offer the promise of reducing data science to rote engineering – you just pay Amazon some money and call the API.

So there’s no further need for expensive data scientists – you can just hire a few software engineers to call the right APIs, and let the money roll in, right?

Why companies still need data scientists, both now and in the future

Indeed, this was more or less my thinking when I started working with a client company to deploy a document-tagging solution just like this. I figured that it would be mostly a software engineering effort with minimal need for data science expertise.

That experience and others have reminded me that things are more complicated than that: even in cases like this, there are still a number of ways in which data scientists are not just valuable, but invaluable, to a business solving these kinds of problems.

1. To choose the right problems to solve, and frame them in the right way

First of all, you need someone to notice that there’s a potential, high-value, feasible opportunity for the effective use of machine learning or data. Occasionally, people in the business will flag a business process that could be automated, or a revenue stream that could be generated by a Data Product.

But this is the exception, in my experience. In practice, one of the key jobs for a data science team within a larger company is to be on the lookout for these kinds of opportunities, and to push to make them a reality. (How to do that well is another story!)

And even once you’ve identified a potential, high-value, feasible opportunity, you still need to frame it in the right way.

In the case of our tagging problem, it’s possible that it would make more sense to reframe it from a classification problem into an information retrieval problem, or a clustering problem, or a reinforcement learning problem, depending on the context...

Finally, you need to shortlist and choose between the various machine learning service providers, to find one that offers a good solution for the specifics of your problem and business. That could take into account factors ranging from the quality of the documentation and API, to the nature of the algorithm they’re using, to the kinds of data you have at your disposal, to their reputation in the data science community.

Example questions:

- What do people spend a lot of time on that could realistically be automated by an algorithm? What could an algorithm do that we don’t currently offer, that would be of value to customers? How much would that be worth to the business?

- Which class of algorithms would be a good fit for this? How hard would a solution be to build? How well should we expect that to work? How good is the state-of-the-art in machine learning at this problem?

- What form does the data exist in now? How clean is it? How easy would it be to rejig it into the form we’d need?

- How expensive in money or time will it be to run this service?

2. To evaluate how well things are going

This is the most important step. You need to evaluate how well things are working. You’ll need to separate training and test sets, decide on the right evaluation metric, agree on an acceptable performance threshold, run A/B tests (aka ‘champion challenger tests’) to compare alternative approaches, and explain the results to the rest of the business. This is where your data scientists shine.
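
To make the first two of those steps concrete, here is a minimal sketch of holding out a test set and scoring one tag with precision and recall. It uses only the Python standard library. The helper names, the 20% hold-out fraction and the tiny hand-made labels are illustrative assumptions, and a real project would more likely lean on an established library.

```python
import random
from typing import List, Sequence, Tuple

Example = Tuple[str, str]  # (post text, tag)


def split_train_test(examples: Sequence[Example], test_fraction: float = 0.2,
                     seed: int = 0) -> Tuple[List[Example], List[Example]]:
    """Shuffle once with a fixed seed, then hold out a fraction for testing."""
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)
    n_test = max(1, int(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]


def precision_recall(y_true: Sequence[str], y_pred: Sequence[str],
                     tag: str) -> Tuple[float, float]:
    """Precision and recall for a single tag, treating it as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == tag and p == tag)
    fp = sum(1 for t, p in pairs if t != tag and p == tag)
    fn = sum(1 for t, p in pairs if t == tag and p != tag)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Made-up labels and predictions, purely to exercise the functions.
y_true = ["finance", "finance", "pets", "pets"]
y_pred = ["finance", "pets", "pets", "pets"]
print(precision_recall(y_true, y_pred, "finance"))
```

Reporting precision and recall per tag, rather than a single accuracy figure, makes it easier to see whether some errors matter more than others.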

Example questions:

- How well should we expect to be able to do?

- Do some errors matter more than others?

- Do our sanity checks pass, e.g. chance performance on scrambled data?

- Are there edge cases, biases or other potential pitfalls that we need to look out for?
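
The scrambled-data sanity check mentioned above can be sketched as follows: train once on the real labels and once on shuffled labels, then compare scores on the same test set. The toy keyword learner is only a stand-in assumption so the example runs on its own. With a commoditised service you would retrain through its API instead, and all names and example posts here are hypothetical.

```python
import random
from collections import Counter, defaultdict
from typing import Callable, List, Tuple

Example = Tuple[str, str]     # (post text, tag)
Model = Callable[[str], str]  # maps post text to a predicted tag


def train_keyword_model(train: List[Example]) -> Model:
    """Toy stand-in learner: each word votes for the tags it was seen with."""
    word_tags = defaultdict(Counter)
    for text, tag in train:
        for word in text.lower().split():
            word_tags[word][tag] += 1
    fallback = Counter(tag for _, tag in train).most_common(1)[0][0]

    def predict(text: str) -> str:
        votes = Counter()
        for word in text.lower().split():
            if word in word_tags:
                votes.update(word_tags[word])
        return votes.most_common(1)[0][0] if votes else fallback

    return predict


def accuracy(model: Model, examples: List[Example]) -> float:
    return sum(model(text) == tag for text, tag in examples) / len(examples)


def scramble_labels(examples: List[Example], seed: int = 0) -> List[Example]:
    """Keep the texts in place but shuffle which tag goes with which text."""
    tags = [tag for _, tag in examples]
    random.Random(seed).shuffle(tags)
    return [(text, tag) for (text, _), tag in zip(examples, tags)]


train = [("stocks fell sharply", "finance"), ("bond yields rose", "finance"),
         ("kitten adoption tips", "pets"), ("puppy training basics", "pets")]
test = [("stocks and bond news", "finance"), ("kitten and puppy care", "pets")]

real_score = accuracy(train_keyword_model(train), test)
scrambled_score = accuracy(train_keyword_model(scramble_labels(train)), test)
print(f"real labels: {real_score:.2f}, scrambled labels: {scrambled_score:.2f}")
```

On a dataset this small the scrambled score is very noisy, so in practice you would repeat the shuffle over several seeds on a realistically sized dataset. If the scrambled score stays well above chance, something is probably leaking between training and test data.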

3. To improve performance

What if the algorithm performance isn’t good enough? What if performance in the real world is worse than expected from your early tests? What if performance worsens over time?

Example questions:

- Is that because of a problem with our data? Is it our features, or our labels? Is it confined to just a few samples? Is it bad data hygiene, or unrepresentative sampling?

- Perhaps there’s just not enough signal in the data?

- Is it overfitting, or underfitting?

- Is it something weird about the particular algorithm being used by the black box commoditised service? What if you call the service differently, or try a competitor service?

- Can we visualise what’s going on?

- How does it behave with various kinds of synthetic data?

- How have the data or the problem changed over time?

- Could you improve performance from your side? What if you change the data you feed in (e.g. preprocess it differently, or only feed in the high-quality samples/features)? What if you had more training data? Can you augment the data with synthetic data?
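
One simple way to start on the "has performance worsened over time?" question is to keep scoring a sample of hand-checked predictions and break accuracy down by time period. The sketch below assumes you log records of the form (period, true tag, predicted tag). The record layout, the period labels and the data are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

Record = Tuple[str, str, str]  # (period, true tag, predicted tag)


def accuracy_by_period(records: Iterable[Record]) -> Dict[str, float]:
    """Fraction of correct predictions within each period, sorted by period."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for period, true_tag, predicted_tag in records:
        total[period] += 1
        correct[period] += int(true_tag == predicted_tag)
    return {period: correct[period] / total[period] for period in sorted(total)}


# Made-up log entries, purely for illustration.
records = [
    ("month-1", "finance", "finance"),
    ("month-1", "pets", "pets"),
    ("month-2", "finance", "pets"),
    ("month-2", "pets", "pets"),
]
print(accuracy_by_period(records))
```

A steady decline across periods suggests that the data or the problem has shifted, while a sudden drop more often points to a change in the pipeline or in the upstream service.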

Your data scientists can help you answer these questions, and many more.

Which is to say, you need at least some data science expertise to choose and define the right problems, and to be confident that things are working well. And you will need much more data science expertise if you want to understand why things aren’t working and try to improve them.

Put simply, you’ll still need data scientists.

What the future of data science means for you

I started writing this piece assuming that I’d end up arguing that most data science problems could be mostly reduced to engineering problems. I’d expected to conclude that in a few years’ time, just a couple of data scientists – with support from data engineering – could indeed be pretty effective at stitching together commoditised ML services for a range of 80:20 solutions.

And maybe, for a well-studied problem and a decent-sized, clean, well-labelled, non-changing dataset, things will work out-of-the-box a good deal of the time. This is the “Dorothy, click your heels three times” territory. But somehow, in the real world things are never so ideal.

So, on that basis, should we expect a continuation of the current trend, where every business seems to be developing an in-house data science team?

I see a number of potential futures. Perhaps the best of the commoditised services will differentiate themselves by offering comprehensive and comprehensible sanity-checking, visualisation, monitoring and diagnostics. Alternatively, maybe they’ll offer some sporadic high-touch hand-holding from their in-house data scientists, to support client engineering teams.

Or perhaps the definition of a full-stack engineer will expand to include core data science best practices. Nowadays, most software engineers are alert to warning flags like copied and pasted code, unnormalised SQL tables, or using HTML tables for layout. Perhaps in future they’ll also be trained to look out for peeking, overfitting, unbalanced labels, misleading metrics, non-stationary data, the brittleness of a model tied to the output of another model, and so on.

The only conclusion that seems clear is that data scientists will spend less time trying out a variety of algorithms and parameters in an effort to tune their models. Just as we would almost always prefer to use an open source algorithm framework (e.g. something from Scikit-learn or PyTorch) rather than implement an algorithm from scratch, data scientists will soon prefer to build on top of a 3rd-party ML service (especially if it has a nice, big pretrained model).

Which will mean fewer days spent waiting for big models to train and no more endless grid searches! But still plenty to do in designing, evaluating, probing, and improving the approach – meaning data scientists can be more productive, scale their work faster, and be even more valuable to a business.

To sum it up:

- Even as the best 3rd-party ML services continue to add more features and support, businesses will still need at least some data science expertise to choose and define the right problems to solve, and to be confident that things are working well. If they want to understand why things aren’t working, and to improve them, they'll need much more data science expertise.

- It’s possible that the role of a full-stack engineer could expand to include a deeper understanding of core data science best practices. Even so, data scientists will still be needed. Since they’ll likely be building on top of 3rd-party ML services, they’ll spend less time tuning their own models, and more time designing, evaluating, probing, and improving the selected approach.